Rack awareness feature in Couchbase

In distributed database systems, data is distributed across many nodes. If you consider Cassandra, it is not uncommon to have a cluster (in Cassandra terms, a ring) with 1000 nodes or even more. These nodes are then grouped into different racks, in cloud terms, different availability zones. The reason is, in the event that a whole rack (availability zone) goes down since the replica partitions are on separate racks, data will remain available.

When it comes to Couchbase, the so-called rack awareness feature is controlled by using Groups. You can assign Couchbase servers into different Groups to achieve the rack awareness capability.

If you are provisioning a Couchbase cluster on AWS, you can create the server Groups analogous to the availability zones on AWS. This logical grouping in Couchbase allows administrators to specify that active and replica partitions be created on servers that are part of a separate rack zone.

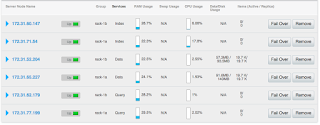

See below figure-1 and notice the Couchbase cluster deployed on AWS has two server groups similar to the availability zone.

This cluster has two nodes for each service offering for data, index, and query. The servers are logically grouped into two groups, rack-1a and rack-1b which is similar to availability zone 1a and 1b on AWS respectively. As a result, servers are physically arranged in two racks.

It is recommended to have the number of servers same between the server groups. If there is an unequal number of servers in one server group, the rebalance operation performs the best effort to evenly distribute replica vBuckets across the cluster.

The rack awareness feature is available only in Enterprise Edition of Couchbase.